This paper talked about the Locality-Sensitive Hashing(LSH) algorithm for the ANN search and improved over the former results to guarantee a sub-linear time complexity. LSH is a popular algorithm in the ANN field because it can provide tight theoretical estimates and upper bounds for many behaviours of the algorithm. This is useful if such assurance is appreciated or critical (e.g. real-time application).

The general concept of LSH is simple: if we can somehow design a hash function that could always hash close points into nearby bins, then we need only to look at a few bins when finding the nearest neighbor for one query. For different distance metrics, the design of the hash function would differ. In this paper, the author concentrate on the L1 norm and argued that L2 norm can be approximated through appropriate scaling and rotation on the data. It is interesting for me to here that but I didn't get time to read the original material. Please tell me if you can explain this :).

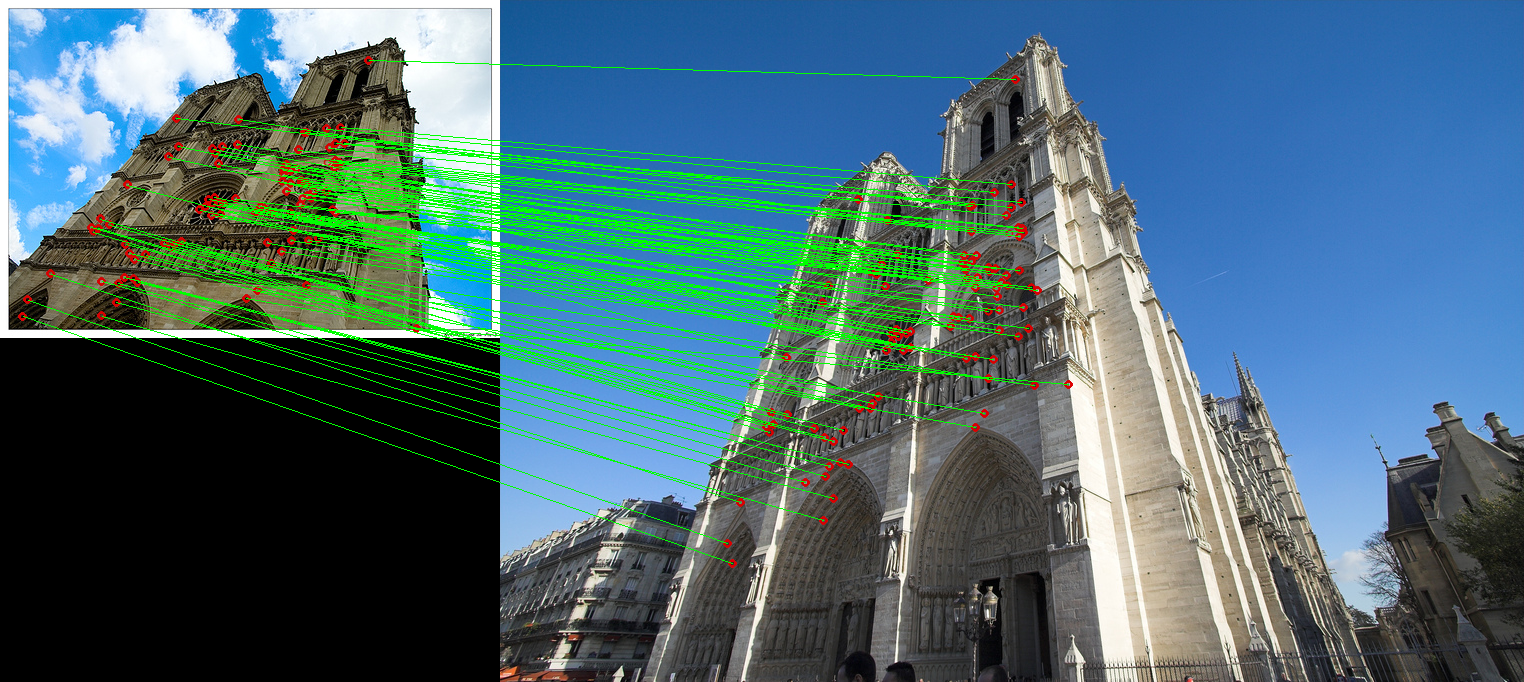

The hashing of L1 norm LSH goes in this way. We first scale the point coordinates so that the smallest seperation of each dimension exceeds one. The coordinates of each point are then discretized, "unarized" and concatnated to form a single binary vector. The LSH function would consists of several sub-functions, each sampling a number of bits from the point binary vector at pre-determined, randomly chosen positions. Each point is put into one hash bins according to the resulting value of each such sub-function. To deal with the huge number of possible bins, the bins are further hashed using standard hashing techniques. When ones need to find the nearest neighbor of a query point, they can go through the whole process and look only at the points in the bins indicated by hash functions. By careful choosing of the number of sub-functions and sampling positions, the LSH can guarantee a high probability of finding true nearest neighbor. In fact, LSH resembles histograms. The sub-functions of LSH are simply a collection of low-dimension histograms with the hope that the true NN would fall in the same cell as the query in at least one of them.

The experiments in the paper is proper. Especially, the choice of disk access measurement is brilliant because many large scale problems generates feature pools of millions to tens of billions data points, which is impossible to fill in the main memory. The question is that the error seems to climb up considerably from the first dataset to the second. I am wondering if this is a problem of LSH in dealing with large data. After all an error of 15% on the average does not sounds attractive. Another problem of LSH would be the need to determine the number of sampling position(seeing from the experiments). It is troublesome as this is not required in tree-based ANN search.