This paper is quite long, and I have to admit that I didn't spend enough time on it. Perhaps my lack of background knowledge made it hard to get through every sentence. I tried to apply the first pass as described in "How to read a paper?" but it didn't help much... I will talk about the parts I did read anyway.

This comprehensive survey covers the progress made in CBIR (Content-Based Image Retrieval) over the last decade. It talks about 1) the three design aspects from both the user's and the system's perspective (skipped here), 2) key techniques developed during this period, 3) problems derived from CBIR, and 4) evaluation metrics for CBIR systems. One remark I would like to quote from the paper is that text is man's creation while images are there by themselves. It is no wonder we find images much harder to handle than text.

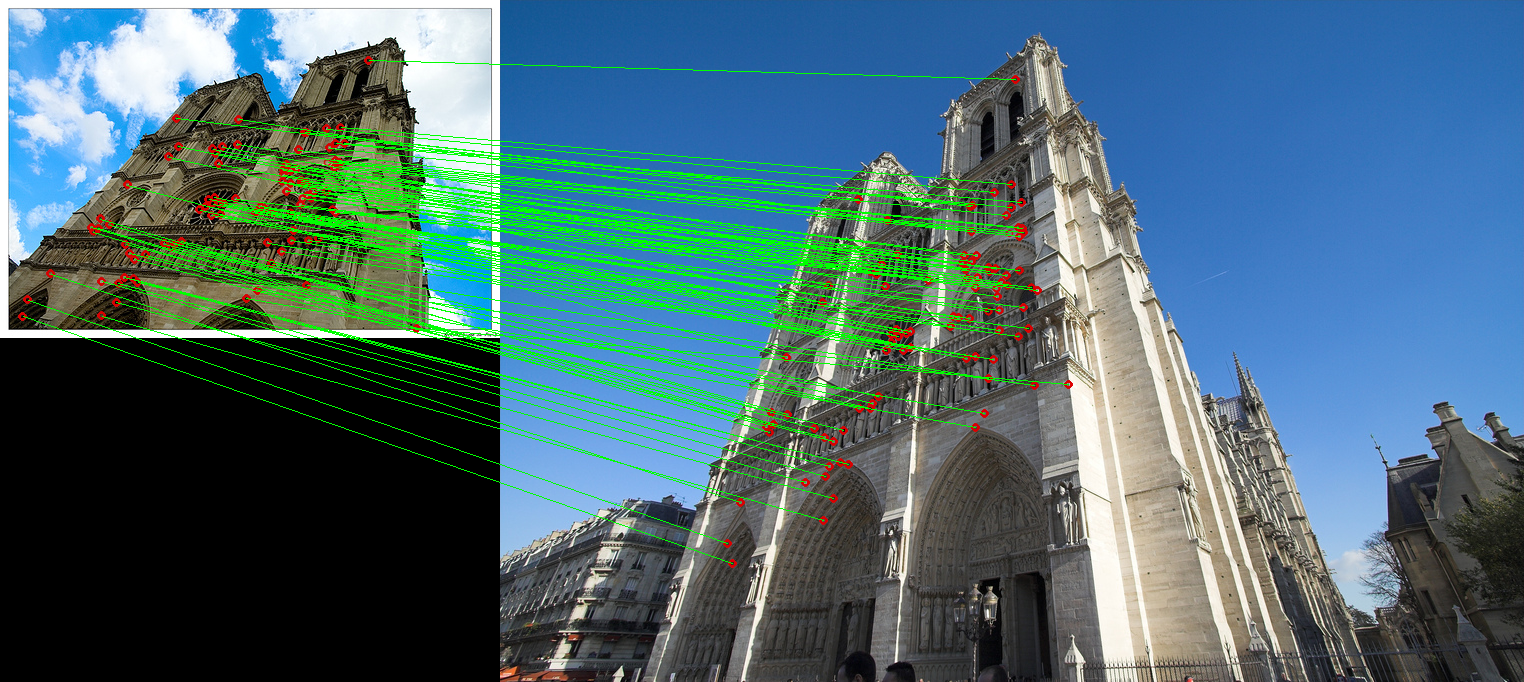

The core obstacles in CBIR revolve around two main problems: how to express an image in a more useful formulation, and how to establish similarity relationships between images. In fact, we can say that every scientific research field faces the first problem and every abstract domain is subject to the second. Researchers in CBIR tackled the first problem with feature extraction at different levels, where the authors observe a shift from global features to local ones. Global features capture the overall characteristics of the entire image, but they are often too clumsy to cope with the local transformations that general images exhibit. Thus, local descriptors received more and more attention over the decade. Beyond local features comes summarization or clustering, which aims to produce image signatures that help later similarity assessment and that can be viewed as "spaces" of images as a whole.
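
To make the global versus local contrast concrete for myself, here is a minimal sketch. It is not taken from the paper: the toy 4x4 grayscale image, the 4-bin intensity histogram, and the function names `global_signature` and `local_signatures` are all my own assumptions. It only illustrates the general idea that a local change (here, occluding one corner) disturbs a global descriptor while leaving most local descriptors untouched.

```python
def histogram(pixels, bins=4, max_value=256):
    """Normalized intensity histogram of a flat list of pixel values."""
    counts = [0] * bins
    for p in pixels:
        counts[p * bins // max_value] += 1
    return [c / len(pixels) for c in counts]


def global_signature(image, bins=4):
    """One histogram over the whole image (hypothetical global feature)."""
    return histogram([p for row in image for p in row], bins)


def local_signatures(image, block=2, bins=4):
    """One histogram per block x block tile (hypothetical local features)."""
    height, width = len(image), len(image[0])
    signatures = []
    for top in range(0, height, block):
        for left in range(0, width, block):
            tile = [
                image[r][c]
                for r in range(top, min(top + block, height))
                for c in range(left, min(left + block, width))
            ]
            signatures.append(histogram(tile, bins))
    return signatures


# Toy image made of four uniform 2x2 tiles (values are made up).
original = [
    [200, 200, 50, 50],
    [200, 200, 50, 50],
    [120, 120, 250, 250],
    [120, 120, 250, 250],
]

# Same image with the top-left tile occluded (painted black).
occluded = [row[:] for row in original]
for r in range(2):
    for c in range(2):
        occluded[r][c] = 0

global_changed = global_signature(original) != global_signature(occluded)
unchanged_tiles = sum(
    a == b
    for a, b in zip(local_signatures(original), local_signatures(occluded))
)
print("global signature changed:", global_changed)
print("local tiles still matching:", unchanged_tiles, "of 4")
```

The single global histogram no longer matches after the occlusion, whereas three of the four tile descriptors are identical, so a matcher built on local descriptors still has something to hold on to.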

Similarity estimation looks to me much like machine learning. In this part, researchers try to find reasonable energy functions that, once optimized with respect to ground truth data, can be used to compute a similarity measure between image signatures. Here one can resort to pre-processing methods (clustering, categorization) or user relevance feedback to ease the burden of similarity measurement and to speed up retrieval. Among those, relevance feedback stands out, not only because it is the most direct communication with users, but also because it requires a careful design to "catch" the user.
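
As a rough illustration of how a similarity measure and relevance feedback fit together, here is a small sketch. It assumes histogram intersection as the similarity measure and a Rocchio-style update as the feedback step; both are standard textbook techniques that I picked for simplicity, not the specific methods the survey discusses. The signatures, image names, and the `alpha`, `beta`, `gamma` weights are made up.

```python
def histogram_intersection(a, b):
    """Similarity of two normalized histograms, in [0, 1]."""
    return sum(min(x, y) for x, y in zip(a, b))


def rank(query, database):
    """Image names sorted from most to least similar to the query."""
    return sorted(
        database,
        key=lambda name: histogram_intersection(query, database[name]),
        reverse=True,
    )


def rocchio(query, relevant, non_relevant, alpha=1.0, beta=0.75, gamma=0.25):
    """Move the query toward relevant signatures and away from the others."""
    def mean(vectors):
        if not vectors:
            return [0.0] * len(query)
        return [sum(column) / len(vectors) for column in zip(*vectors)]

    rel, non = mean(relevant), mean(non_relevant)
    updated = [
        max(0.0, alpha * q + beta * r - gamma * n)
        for q, r, n in zip(query, rel, non)
    ]
    total = sum(updated)
    # Renormalize so the refined query is again a valid histogram.
    return [u / total for u in updated] if total else updated


# Hypothetical 3-bin signatures for three images.
database = {
    "sunset": [0.8, 0.1, 0.1],
    "forest": [0.1, 0.8, 0.1],
    "beach": [0.3, 0.1, 0.6],
}
query = [0.5, 0.4, 0.1]

first_ranking = rank(query, database)

# The user marks "forest" as relevant and "sunset" as not relevant.
refined = rocchio(query, [database["forest"]], [database["sunset"]])
second_ranking = rank(refined, database)

print(first_ranking[0], "->", second_ranking[0])
```

With these numbers the initial query ranks "sunset" first, and after one round of feedback the refined query ranks "forest" first. The careful design mentioned above is exactly what this sketch leaves out: how many images to show, how to ask for feedback, and how much to trust it.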

Finally, fusing information from other media for better content retrieval is a novel direction.

Along the way, CBIR has also spawned many branch fields and research topics. These new problems include image annotation, which aims to give conceptual textual descriptions for images; pictures for story, which finds the pictures that best depict a concept (the reverse of the first one); aesthetics measurement, which measures the aesthetic perception of images; image security, which exploits the limitations of CBIR systems to help identify human users; and so on. CBIR system evaluation is also briefly mentioned at the end of the paper, to show the lack of related research.

From the authors' perspective, we should pay more attention to application-oriented aspects, since they directly influence the success of any system and deserve to be "considered equally important". The growing size of image data also poses a hard barrier for the future of CBIR. In any case, we can anticipate continuing progress in this field.