This is a quite long and tough paper. The author chose a practical computer vision problem to demonstrate several inference and learning algorithms on various graphical models. This algorithms are variants of EM, including ICM, Gibbs Sampling, Variational, Structure Variantional and Max-Product BP. Finally, an experiment were introduced on small size data. The results give us some basic ideas of the algorithms' behaviour.

According to the paper, graphical models can be divided into three kinds: bayesian networks, MRF and factor graphs, with the last one subsuming the first two. By representing model parameters as random variables, we may proceed many approximate learning procedures on the model with training data. The learning procedures then each have their own properties. For example, exact EM models an acceptable amount of randomness, so given a not-too-bad intialization, it could often converge to a good solution. ICM ignores any randomness and takes greedy steps at each possible update. As a result, its solution is usually the worst. Belief propagation uses a simple updating machenism, but as a contrary, it could produce satisfying results on many problems sufficiently fast.

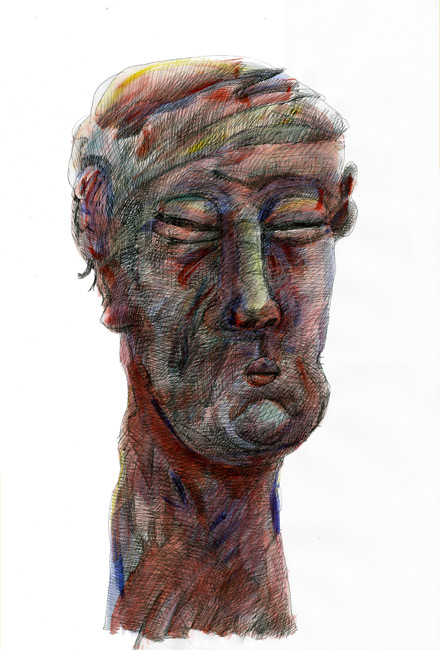

The experimental result of exact EM method on the occlusion problem is truly astonishing. It is hard to believe such quality could be achieved without introducing smoothness prior or initial bg/fg priors. I am wondering how it would turn out to be if such elements were put into play. In any case, this paper does serve as an excellent reference of probabilistic inference and learning methods on graphical models.

沒有留言:

張貼留言